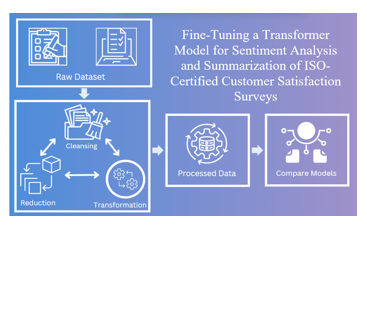

Fine-Tuning a Transformer Model for Sentiment Analysis and Summarization of ISO-Certified Customer Satisfaction Surveys

DOI:

https://doi.org/10.69478/BEST2025v1n2a025

Keywords:

Natural language processing, BERT, LSTM, Logistic Regression, Sentiment Analysis

Abstract

Understanding customer sentiment is essential to improving service quality, especially for organizations that rely on regular feedback, such as ISO-certified institutions. While structured survey questions provide valuable data, open-ended comments often offer the most insight into customer experiences. However, manually analyzing large volumes of written feedback is time-consuming and inconsistent. This study explores the use of machine learning and deep learning models to automate sentiment analysis of responses gathered from customer satisfaction surveys. The research compared the performance of three models: BERT (Bidirectional Encoder Representations from Transformers), LSTM (Long Short-Term Memory), and Logistic Regression. The models were applied to customers' comments and suggestions to gain a clearer understanding of their feedback. Before modeling, the dataset went through a series of pre-processing steps, including handling missing values and cleaning the comments, to ensure quality output. BERT significantly outperformed the other models, achieving the highest overall accuracy of 84%, followed by LSTM at 78%, while Logistic Regression lagged behind at 39%. These findings highlight the value of transformer-based models like BERT for understanding complex, unstructured customer feedback and suggest that such models can play a meaningful role in decision-making and service improvement.
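The pre-processing and evaluation steps mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not specify the exact cleaning rules, so the rules below (lowercasing, removing URLs and punctuation, treating entries such as "N/A" as missing) and the function names `clean_comment`, `preprocess`, and `accuracy` are assumptions made for illustration, not the authors' pipeline.

```python
import re

# Placeholder strings treated as "missing" after cleaning (an assumption).
MISSING_MARKERS = {"", "n a", "na", "none", "nan"}


def clean_comment(text):
    """Normalize one free-text comment; return None if nothing usable remains."""
    if text is None:
        return None
    text = str(text).lower()
    text = re.sub(r"https?://\S+", " ", text)   # drop URLs
    text = re.sub(r"[^a-z0-9\s']", " ", text)   # drop punctuation and symbols
    text = re.sub(r"\s+", " ", text).strip()    # collapse whitespace
    return None if text in MISSING_MARKERS else text


def preprocess(rows):
    """Keep only (comment, label) pairs where both values are present."""
    cleaned = []
    for comment, label in rows:
        comment = clean_comment(comment)
        if comment is None or label is None:
            continue  # handle missing values by dropping the row
        cleaned.append((comment, label))
    return cleaned


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("label lists must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical survey rows, invented for this example.
rows = [
    ("Great service!!  Very fast.", "positive"),
    (None, "negative"),
    ("N/A", "neutral"),
    ("Staff was rude :(", "negative"),
]
cleaned_rows = preprocess(rows)
```

Accuracy here is the share of comments whose predicted sentiment matches the labeled sentiment, which is the metric the abstract reports for each model; the same function would apply to predictions from any of the three models.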


License

Copyright (c) 2025 Jayson A. Daluyon, Ronel J. Bilog (Author)

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.